Microserver Build

Tiny server build with an OptiPlex 7060 and XCP-ng.

This post walks through some interesting parts of my home-lab server build: a repurposed Dell OptiPlex micro PC running the XCP-ng virtualization platform.

The Hardware

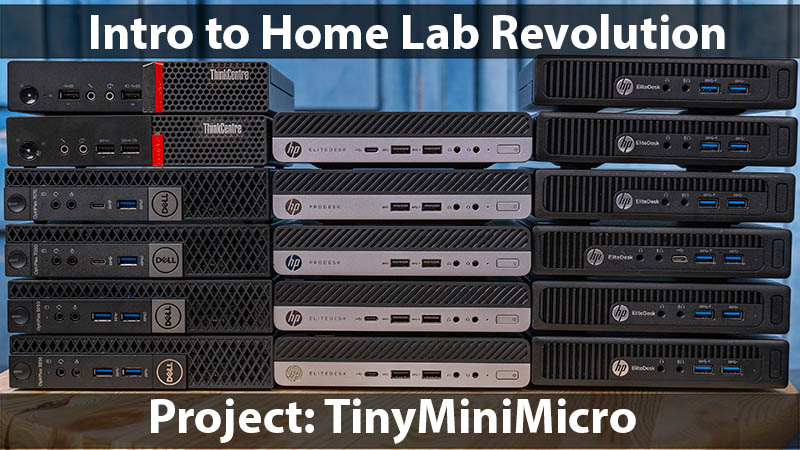

I acquired a micro-sized Dell OptiPlex 7060 for this project from Facebook Marketplace. HP, Dell, and Lenovo all have awesome tiny machines in this form factor. These machines are often used in enterprises, where the hardware refresh cycle means that used units are commonly available on second-hand markets like Facebook Marketplace, eBay, etc.

And using these machines in this way is not novel... ServeTheHome gave a great introduction to them a few years ago.

My new little machine has room for two SSDs (one NVMe drive and one 2.5" SATA drive). I picked up 4TB versions of each to give this little machine 8TB of total storage (or 4TB if I were a responsible human and mirrored the drives).

I also maxed out this machine's RAM at 32GB because nobody has complained about having too much RAM in a VM host and, unlike CPU capacity, you can't overprovision RAM with XCP-ng.

Oh, and one more thing: I've also repurposed an old Raspberry Pi Zero W, which is used for my encrypted boot (more on that later).

The Software

I wanted this machine to host virtual machines. Previously, I would probably have tried to get VMware ESXi running on it, but Broadcom's acquisition of VMware killed their free hobbyist offering. It's time to look at new options.

I briefly considered throwing plain-old Linux on this machine and using KVM/LXC. I opted not to take this path because it's too finicky. I wanted a point-and-click appliance where I could easily spin up/adjust VMs as new projects arise rather than having to remember the CLI incantations that I'd need under Linux. This is especially true because my network configuration is non-trivial and heavily uses VLANs and segmentation.

Another option I considered was Proxmox VE, which is quite popular among hobbyists. I've tried Proxmox before, and it never clicked for me. Last time I checked, coping with my complicated network setup required me to drop to the CLI.

So, I settled on XCP-ng, which is based on the enterprise-grade but open-source Xen hypervisor.

XCP-ng: the user-friendly, high-performance virtualization solution, developed collaboratively for unrestricted features and open-source accessibility.

Sounds great! For me, a few of the highlights of XCP-ng were:

- Open-source and based on the rock-solid Citrix Xen hypervisor.

- The great web-based admin UI (Orchestra) is installed separately and can manage multiple VM hosts.

- A great CLI is available that I can use to administer the machine remotely via ssh (incidentally, xsconsole is a nice little default terminal UI that lets you start/stop VMs without remembering the more complex commands)

- Support for backups, automated snapshots, etc.

- Lots of enterprise-type features to grow into (live migrations, HA, etc.)

- Import from VMware!

The installation itself was straightforward and well-covered in the official documentation.

The Orchestra

You can configure/manage XCP-ng through the CLI (handy in a pinch, but not what I want for my day-to-day). Fortunately, there is a great front-end for XCP-ng called Xen Orchestra. Xen Orchestra is a commercial offering from Vates Virtualization, the company that manages XCP-ng, but it can be used for free with limited capabilities. Alternatively, you can install Xen Orchestra Community for free, with fewer (but different) restrictions.

After a fresh installation of XCP-ng, browsing to http://my-new-server-ip offers a push-button installation of XOA (the Xen Orchestra Appliance) that gets you up and running quickly.

Since this is a homelab, and I won't be pursuing commercial support for this environment, I opted to go with the community version. Fortunately, a ready-to-go Docker container gets you up and running in about two seconds!

The docker-compose.yml, etc. that I ended up using sets up my Orchestra service exposed only on my Tailscale Tailnet. I used this, rather than what I had below, because I can easily administer XCP-ng from any of my Tailnet-connected machines.

I cheated and ran this on a different Docker host, which I then used to provision a new Ubuntu VM on my new server. I installed Docker on that new VM, deployed the Docker container for XO on that host, tested it out, and then destroyed my original XO container.

curl https://get.docker.com | sh

mkdir -p /docker/orchestra

Then created /docker/orchestra/docker-compose.yml

version: '3'
services:
  xen-orchestra:
    restart: unless-stopped
    image: ronivay/xen-orchestra:latest
    container_name: xen-orchestra
    stop_grace_period: 1m
    ports:
      - "80:80"
    environment:
      - HTTP_PORT=80
    cap_add:
      - SYS_ADMIN
      - DAC_READ_SEARCH
    security_opt:
      - apparmor:unconfined
    volumes:
      - xo-data:/var/lib/xo-server
      - redis-data:/var/lib/redis
    logging: &default_logging
      driver: "json-file"
      options:
        max-size: "1M"
        max-file: "2"
    devices:
      - "/dev/fuse:/dev/fuse"
      - "/dev/loop-control:/dev/loop-control"
      - "/dev/loop0:/dev/loop0"

volumes:
  xo-data:
  redis-data:

And voila. I have the community edition of Xen Orchestra up and running!
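The compose file still has to be started before anything is running. A minimal sketch, assuming the Docker Compose v2 plugin (`docker compose`) came along with the Docker install and that the file lives in /docker/orchestra as above; the helper name `start_orchestra` is made up for illustration:

```shell
# Hypothetical helper: start the Xen Orchestra stack defined in a compose directory.
# Assumes the Compose v2 plugin ("docker compose"); older installs use "docker-compose".
start_orchestra() {
  compose_dir="${1:-/docker/orchestra}"
  # Run from the directory holding docker-compose.yml; "up -d" pulls the
  # image if it is missing and starts the container in the background.
  (cd "$compose_dir" && docker compose up -d)
}

# Usage (on the Docker host):
#   start_orchestra /docker/orchestra
#   docker logs -f xen-orchestra   # watch the first start-up
```

With the port mapping above, the web UI should then answer on port 80 of that Docker host.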

Creating Networks (VLANs)

As mentioned earlier, I have my network segmented into roughly:

- LAN for family (keep my kid's sketchy Minecraft mods away from my systems)

- SECURE LAN for me

- MANAGEMENT for switch configuration, server management ports, and VM management front-ends

- DMZ for untrusted Internet-facing services

- GUEST for guests

I have separate wireless networks for most of those and use VLANs to isolate traffic between networks. Fortunately, adding these networks to XCP-ng is trivial.
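For anyone who prefers the CLI over the web UI, the same thing can be sketched with `xe`. This is only an illustration under a few assumptions: a single host, a trunk port on `eth0`, and made-up VLAN IDs; the function name is hypothetical.

```shell
# Hypothetical helper: create a named network and attach it to a NIC as a tagged VLAN.
# Arguments: network name, VLAN ID, physical device (eth0 is an assumption).
create_vlan_network() {
  name="$1"; vlan_id="$2"; device="${3:-eth0}"
  # UUID of the physical interface that carries the trunk
  # (on a multi-host pool this would return one UUID per host).
  pif_uuid=$(xe pif-list device="$device" physical=true --minimal)
  # network-create prints the UUID of the new network.
  net_uuid=$(xe network-create name-label="$name")
  # Tie the network to the physical interface with the given VLAN tag.
  xe vlan-create network-uuid="$net_uuid" pif-uuid="$pif_uuid" vlan="$vlan_id"
}

# Usage on the XCP-ng host (VLAN IDs are placeholders):
#   create_vlan_network "DMZ" 30
#   create_vlan_network "GUEST" 40
```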

Now, when creating a VM, I can choose which network the VM is tied to.

Easy peasy!

Importing from VMware

A slick feature of XCP-ng, which is particularly relevant for me as I migrate away from VMware, is its Import from VMware feature. It does what you'd expect. You give it credentials to your VMware instance, and it lets you pick the VM(s) to pull across. Note that on XOA, this functionality requires a paid license, but it is available for free under XO Community.

We can see progress on our Tasks page. I've become spoiled with the 10Gig on my big servers. It's time for some ☕...

Once the VM is copied, a couple of things need some love:

- The Ethernet device changed from ens160 to eth0, so the newly booted VM doesn't initially connect to the network. This is an easy fix. Just edit /etc/netplan/00-installer-config.yaml, replace ens160 with eth0, and run netplan apply.

- The migrated VM doesn't have the "XCP-ng Guest Tools" installed, but this is also a quick fix.

- Double-check the resources allocated to the VM (CPUs, memory, etc.)
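The interface rename from the first item can be scripted if there are several VMs to migrate. A small sketch, assuming the interface name appears as a YAML key in the netplan file; the function name is hypothetical and a backup copy is kept just in case:

```shell
# Hypothetical helper: replace one interface name with another in a netplan file.
# A backup copy (<file>.bak) is kept next to the original.
rename_netplan_iface() {
  file="$1"; old="$2"; new="$3"
  cp "$file" "$file.bak"
  # Only touch lines where the old name is used as a YAML key ("ens160:").
  sed "s/^\([[:space:]]*\)$old:/\1$new:/" "$file.bak" > "$file"
}

# Usage inside the migrated VM (as root):
#   rename_netplan_iface /etc/netplan/00-installer-config.yaml ens160 eth0
#   netplan apply
```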

Encrypted Storage

These days, I don't deploy a computer without disk encryption. For this server, I want disk encryption for two primary reasons:

- It eliminates the problem where "Future Jeff" forgets what was installed on these drives and recycles/uses them elsewhere, potentially inadvertently exposing my data.

- This server is cute and tiny. It seems like something that could potentially "wander off." It would be annoying if someone stole my server, but I would rather not worry about its data.

Unfortunately, XCP-ng doesn't yet support full disk encryption natively, but it's not too difficult to get it up and running (only slightly voiding the warranties).

This machine will run headless, so prompting for a password on boot would be annoying. In the past, I've used a USB thumb drive as a sort of ignition key, but the temptation is to leave that in the machine (so I can reboot it remotely), and that defeats the purpose for use-case #2 (the machine gets stolen).

Fortunately, an awesome technology called Network Bound Disk Encryption is exactly what I want. The nutshell is that I have a machine on my network that hands out encryption keys to other devices. This means as long as my server is on my network (or a network with that other device), it'll boot automatically, but if someone "relocates" my server, that key server won't be available, and the drives will be locked. Sticking these two machines right next to each other probably defeats the purpose. Still, a Raspberry Pi is just small enough that you can stash it somewhere else where it will hopefully avoid notice from server-swiping hooligans.

I did a fresh Ubuntu install (incidentally, the Raspberry Pi Imager is awesome these days and has the option to set up wifi and initial users when writing the image!) on my Raspberry Pi, logged into the console, and installed/enabled Tang. This service will be handing out keys to my network-attached servers.

apt install tang

systemctl enable tangd.socket

systemctl start tangd.socket

Tang stores its keys under /var/lib/tang.

Now, on our XCP-ng machine... SSH into it...
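Before installing anything, it can be worth confirming that the host can actually reach Tang. A minimal sketch, assuming curl is available on the XCP-ng host and relying on Tang's /adv advertisement endpoint; the function name is made up, and the IP is the same placeholder used in the bind commands further down:

```shell
# Hypothetical helper: succeed only if a Tang server answers on its advertisement endpoint.
tang_reachable() {
  url="$1"
  # -f: treat HTTP errors as failure, -s: quiet,
  # --max-time: give up quickly if the host is unreachable.
  curl -fs --max-time 5 "$url/adv" > /dev/null
}

# Usage:
#   tang_reachable http://999.999.999.999 && echo "Tang is answering"
```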

First, we'll install Clevis (a pluggable framework for automated decryption).

yum --enablerepo=base install clevis-dracut clevis-luks

systemctl enable clevis-luks-askpass.path

When I installed XCP-ng, I put it on the NVMe drive (/dev/nvme0n1) and also selected that for VM storage. During install /dev/sda was ignored. In my environment, this created a partition /dev/nvme0n1p3 (~3TB) and I'll be using that for the remainder. Make sure to double check that your partitions / devices are correct!

Next, we'll initialize the two drives /dev/sda and /dev/nvme0n1p3. Make sure to pick a strong password and record it somewhere. This is the fallback if Tang fails!

cryptsetup luksFormat /dev/sda

cryptsetup luksFormat /dev/nvme0n1p3

Now, we'll use Clevis to bind each drive to our Tang server (replace 999.999.999.999 with the static IP for your Tang server).

clevis luks bind -d /dev/sda tang '{"url": "http://999.999.999.999"}'

clevis luks bind -d /dev/nvme0n1p3 tang '{"url": "http://999.999.999.999"}'

Alternatively, Clevis can bind a drive to several Tang servers at once using its sss (Shamir's Secret Sharing) pin. This would set up two Tang servers, and any one of them is sufficient:

clevis luks bind -d /dev/sda sss '{"t": 1, "pins": {"tang": [{"url": "http://999.999.999.1"}, {"url": "http://999.999.999.2"}]}}'

This would set up three Tang servers and require keys from any two of them:

clevis luks bind -d /dev/sda sss '{"t": 2, "pins": {"tang": [{"url": "http://999.999.999.1"}, {"url": "http://999.999.999.2"}, {"url": "http://999.999.999.3"}]}}'

And finally, we need the underlying device IDs for the next step:

blkid /dev/sda

blkid /dev/nvme0n1p3

Now create /etc/crypttab and fill it with:

crypt_sda UUID=##ID FOR SDA## none _netdev

crypt_nvme UUID=##ID FOR NVME## none _netdev

At this point, you should be able to reboot, and if you look in /dev/mapper you'll see, among other things, your new crypt_nvme and crypt_sda devices.

[20:55 marmot ~]# ls /dev/mapper

control crypt_nvme crypt_sda

At this point, I could create two SRs (pointing to /dev/mapper/crypt_nvme and /dev/mapper/crypt_sda), but I'd like to lash them together and make one larger 8TB volume.

I spent ages trying to make this work: manually creating the LVM VG/LV with the two drives and failing to have it recognized at boot. XCP-ng really doesn't like you messing around with LVM by hand.

Fortunately, there is an easy answer. Use Xen Orchestra or the CLI to create an SR with just one of the drives and then extend the VG by adding the second. Here is what I did:

# Create the SR with a single drive

xe sr-create type=ext content-type=user name-label="Primary" device-config:device=/dev/mapper/crypt_nvme

# Make sure sda is freshly initialized

pvcreate /dev/mapper/crypt_sda

# Get the Volume Group Name [VG] for the newly created SR VG ("XSLocalEXT-...")

pvs | grep crypt_nvme

# Add SDA to our new VG

vgextend [VG] /dev/mapper/crypt_sda

# Get the name of the [LV] on our volume group

lvdisplay /dev/[VG]

# Extend the LV

lvextend -l +100%FREE /dev/[VG]/[LV]

# And Extend the EXT filesystem

resize2fs /dev/[VG]/[LV]

I will follow up with a reboot to ensure everything comes back online properly.

If that was a bit hard to follow, here is the log of steps/results from my machine:

[07:56 marmot ~]# xe sr-create type=ext content-type=user name-label="Primary" device-config:device=/dev/mapper/crypt_nvme

4ee2023e-48e4-0453-ccbf-6673c7a4fb6b

[07:57 marmot ~]# pvcreate /dev/mapper/crypt_sda

Physical volume "/dev/mapper/crypt_sda" successfully created.

[07:57 marmot ~]# pvs | grep crypt_nvme

/dev/mapper/crypt_nvme XSLocalEXT-4ee2023e-48e4-0453-ccbf-6673c7a4fb6b lvm2 a-- <3.60t 0

[07:57 marmot ~]# vgextend /dev/XSLocalEXT-4ee2023e-48e4-0453-ccbf-6673c7a4fb6b /dev/mapper/crypt_sda

Volume group "XSLocalEXT-4ee2023e-48e4-0453-ccbf-6673c7a4fb6b" successfully extended

[07:57 marmot ~]# lvdisplay /dev/XSLocalEXT-4ee2023e-48e4-0453-ccbf-6673c7a4fb6b

--- Logical volume ---

LV Path /dev/XSLocalEXT-4ee2023e-48e4-0453-ccbf-6673c7a4fb6b/4ee2023e-48e4-0453-ccbf-6673c7a4fb6b

LV Name 4ee2023e-48e4-0453-ccbf-6673c7a4fb6b

VG Name XSLocalEXT-4ee2023e-48e4-0453-ccbf-6673c7a4fb6b

LV UUID e6fPw0-vJct-JKxF-OHQc-Jno1-hfiK-YI15I1

LV Write Access read/write

LV Creation host, time marmot, 2024-11-04 07:57:08 -0700

LV Status available

# open 1

LV Size <3.60 TiB

Current LE 943234

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:3

[07:57 marmot ~]# lvextend -l +100%FREE /dev/XSLocalEXT-4ee2023e-48e4-0453-ccbf-6673c7a4fb6b/4ee2023e-48e4-0453-ccbf-6673c7a4fb6b

Size of logical volume XSLocalEXT-4ee2023e-48e4-0453-ccbf-6673c7a4fb6b/4ee2023e-48e4-0453-ccbf-6673c7a4fb6b changed from <3.60 TiB (943234 extents) to <7.24 TiB (1897095 extents).

Logical volume XSLocalEXT-4ee2023e-48e4-0453-ccbf-6673c7a4fb6b/4ee2023e-48e4-0453-ccbf-6673c7a4fb6b successfully resized.

[07:58 marmot ~]# resize2fs /dev/XSLocalEXT-4ee2023e-48e4-0453-ccbf-6673c7a4fb6b/4ee2023e-48e4-0453-ccbf-6673c7a4fb6b

resize2fs 1.47.0 (5-Feb-2023)

Filesystem at /dev/XSLocalEXT-4ee2023e-48e4-0453-ccbf-6673c7a4fb6b/4ee2023e-48e4-0453-ccbf-6673c7a4fb6b is mounted on /run/sr-mount/4ee2023e-48e4-0453-ccbf-6673c7a4fb6b; on-line resizing required

old_desc_blocks = 461, new_desc_blocks = 927

The filesystem on /dev/XSLocalEXT-4ee2023e-48e4-0453-ccbf-6673c7a4fb6b/4ee2023e-48e4-0453-ccbf-6673c7a4fb6b is now 1942625280 (4k) blocks long.

[07:58 marmot ~]# reboot
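The [VG] and [LV] lookups in the steps above can also be done by the script instead of by eye. A sketch only, assuming the SR was already created with xe sr-create on the first device and that its volume group holds a single logical volume (as in the log above); the function name is hypothetical:

```shell
# Hypothetical helper: grow an existing EXT SR onto a second (already unlocked) device.
# Arguments: the PV the SR was created on, and the device to add.
extend_sr() {
  first_pv="$1"; new_pv="$2"
  # Volume group that XCP-ng created for the SR ("XSLocalEXT-...").
  vg=$(pvs --noheadings -o vg_name "$first_pv" | tr -d ' ')
  # Name of the logical volume inside that volume group.
  lv=$(lvs --noheadings -o lv_name "$vg" | tr -d ' ')
  [ -n "$vg" ] && [ -n "$lv" ] || { echo "could not find VG/LV for $first_pv" >&2; return 1; }
  # Same sequence as the manual steps; stop at the first failure.
  pvcreate "$new_pv" &&
    vgextend "$vg" "$new_pv" &&
    lvextend -l +100%FREE "/dev/$vg/$lv" &&
    resize2fs "/dev/$vg/$lv"
}

# Usage on the XCP-ng host:
#   extend_sr /dev/mapper/crypt_nvme /dev/mapper/crypt_sda
```

Chaining the commands with && means a failed vgextend never reaches lvextend or resize2fs.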

Now, my data is safe. The drives storing my VM images are encrypted (ensuring that if a drive is lost/stolen/repurposed, the data on that drive is unusable). The server will automatically unlock the drives on boot if the server is on my network and my Tang server is up and running (with the password being a fallback).
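Since automatic unlocking depends on a Tang server being reachable, the multi-server sss policies shown earlier may be worth the extra setup. The JSON gets fiddly to type by hand, so here is a small sketch that builds it; the function name is made up, and the URLs are the same placeholders as before:

```shell
# Hypothetical helper: build the JSON policy for Clevis' sss pin.
# Arguments: threshold (how many servers must answer), then one or more Tang URLs.
build_sss_config() {
  threshold="$1"; shift
  pins=""
  for url in "$@"; do
    # Separate entries with a comma, except before the first one.
    if [ -n "$pins" ]; then pins="$pins, "; fi
    pins="$pins{\"url\": \"$url\"}"
  done
  printf '{"t": %s, "pins": {"tang": [%s]}}\n' "$threshold" "$pins"
}

# Usage: require any two of three Tang servers
#   clevis luks bind -d /dev/sda sss \
#     "$(build_sss_config 2 http://999.999.999.1 http://999.999.999.2 http://999.999.999.3)"
```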

Bonus: Templates and CloudInit

If you find yourself spinning up Ubuntu VMs like crazy, XCP-ng has some tricks to save a tonne of time.

First, you can download Ubuntu Cloud Images from Ubuntu. Here is the download page for the latest 24.04 LTS Cloud Image. Download the .ova file (noble-server-cloudimg-amd64.ova).

The Cloud Image is cool for a couple of reasons:

- It already has the latest updates (as of download time) applied, saving a bunch of time on first boot.

- It's already got the finicky stuff done for allowing CloudInit to work.

Import this image into XCP-ng via "Import" > "VM": select your Pool and SR, and drag the OVA onto the upload page. If you want, fiddle with the default resource allocations, and hit Import.

Now, in your VM list, navigate to that new VM, select the Advanced Tab, and select "Convert to Template". Doing so will remove this from your VM list, and add it as an option in your template list.

Next, we can create some Cloud configs under "Settings" > "Cloud configs". Here are my sample configs (make sure to adjust and put in your SSH keys)
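As a rough idea of what a user config can contain, here is a minimal sketch that prints one, ready to paste into a new Cloud config. It is illustrative rather than a copy of any particular setup: the login name and SSH key are placeholders, and pulling the guest tools from Ubuntu's xe-guest-utilities package is an assumption.

```shell
# Hypothetical helper: print a minimal cloud-init user config.
# Arguments: login name and a public SSH key (both placeholders).
print_user_config() {
  user="$1"; ssh_key="$2"
  cat <<EOF
#cloud-config
users:
  - name: $user
    groups: sudo
    shell: /bin/bash
    sudo: ALL=(ALL) NOPASSWD:ALL
    ssh_authorized_keys:
      - $ssh_key
package_update: true
package_upgrade: true
packages:
  - xe-guest-utilities
EOF
}

# Usage:
#   print_user_config admin "ssh-ed25519 AAAA... admin@laptop"
```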

Now, when creating a new VM, you can choose that new template, select "Custom Config" and select your user/network config. Fine-tune the settings, and click Create.

Within about 30 seconds you'll have a fresh up-to-date Ubuntu VM running with your user account, your SSH keys, whatever additional packages you like and up-to-date xe-guest-tools installed.

Wrap-up

And that's it. It's time to start spinning up VMs like a madman!

Moving from VMware on big servers, there are a few downsides:

- These machines don't have fancy dedicated remote management features that compete with the iDRAC system on my Dell servers.

- The per-node maximum storage is very limited. My larger server has 12 3.5" drives in it.

- Data redundancy. With only two drives, I'm either mirroring or not. I can deploy ZFS with the big servers and easily benefit from multiple disk redundancy.

However, I think the positives outweigh the negatives for me for now:

- I'm still running enterprise-grade virtualization software (Xen... it's a big deal)

- I'm no longer running on a deprecated platform (free VMware ESXi)

- My VM data is now encrypted at rest

- It's almost completely silent.

- It sips power and generates very little heat (a huge win over the big servers that raise the temperature in my crawl space to unsafe levels)

- Orchestra can manage multiple hosts, so when I need more capacity, I can add servers and manage them with one front-end.

- My next move is going to have significantly less back damage (or it would if I got rid of the old servers - I'm not)